How we launched docs.github.com

ICYMI: docs.github.com is the new place to discover all of GitHub’s product documentation!

We recently completed a major overhaul of GitHub’s documentation websites. When you visit docs.github.com today, you’ll see content from the former help.github.com and developer.github.com sites in a unified experience.

Our engineering goals were twofold: to improve the reading and navigation experience for GitHub’s users, and to improve the suite of tools that GitHub’s writers use to create and publish documentation.

Combining the content was the last of several complex projects we completed to reach these goals. Here’s the story behind this years-long effort, undertaken in collaboration with GitHub’s Product Documentation team and many other contributors.

A brief history of the docs sites

Providing separate docs sites for different audiences was the right choice for us for many years. But our plans evolved along with GitHub’s products. Over time, we aspired to help an international audience use GitHub by:

- Offering multi-language support for all content

- Scaling docs for new products

- Autogenerating API reference docs

- Providing interactive experiences

- Allowing anyone to easily contribute documentation

We couldn’t do these things when we had two static sites, each with its own codebase, its own way of organizing content, and its own markup conventions. Efforts were made to streamline the tooling over the years, but they were limited by the nature of static builds.

To achieve our goals, we determined we needed to write a custom dynamic backend and, eventually, combine the content.

Only fixing what was broken

Our docs sites were previously hosted on GitHub Pages using Jekyll practices: a content directory full of Markdown files and a data directory full of YAML files. This is a great setup for simple sites, and it worked for us for a long time. Although we outgrew Jekyll tooling, the writing conventions based in Markdown and YAML worked well. So we kept them, and we built the dynamic site around them.

Keeping these conventions let us alleviate pain points in the tooling without introducing a new paradigm for technical writing and asking writers to learn it. It also meant that writers could continue publishing content that helps people use GitHub in the old system while we built the new one.

What was broken?

We outgrew static site tooling for a number of reasons. A big factor was the complexity of versioning content for our GitHub Enterprise Server product.

We release a new version of GitHub Enterprise Server every three months, and we support docs for each version for one year before we deprecate them. At any time, we provide docs for four versions of GitHub Enterprise Server.

We handle this complexity by single-sourcing our docs. This means we provide multiple versions of each article, and a dropdown on the site lets users switch between versions. Here’s how it looked in the old help.github.com:

Versioning can be hard. Some articles are available in all versions. Some are GitHub.com-only. Some are Enterprise Server-only. Some have lots of internal conditionals, where a single paragraph or even a word may be relevant in some versions but not others. We also have workflows and tools for releasing new versions and deprecating old versions.

What does single-sourcing look like under the hood? Writers use the Liquid templating language (another Jekyll holdover) to render version-specific content using if / else statements:

{% if page.version == 'dotcom' or page.version ver_gt '2.20' %}

Content relevant to new versions

{% else %}

Content relevant to old versions

{% endif %}

Statements like this are all over the content and data files.
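
Stock Liquid has no `ver_gt` operator, so a comparison like the one above has to be supplied by the site. The following is a minimal sketch of what such a check could involve, not the actual implementation; the function names `versionGreaterThan` and `showsNewContent` are hypothetical. Comparing release numbers segment by segment matters because a plain string comparison would rank 2.9 above 2.20.

```javascript
// Hypothetical helper: compares dotted release numbers segment by segment.
function versionGreaterThan(a, b) {
  const left = a.split('.').map(Number);
  const right = b.split('.').map(Number);
  const length = Math.max(left.length, right.length);
  for (let i = 0; i < length; i++) {
    const l = left[i] ?? 0;
    const r = right[i] ?? 0;
    if (l !== r) return l > r;
  }
  return false; // equal versions are not "greater than"
}

// Mirrors the condition in the Liquid snippet above.
function showsNewContent(pageVersion) {
  return pageVersion === 'dotcom' || versionGreaterThan(pageVersion, '2.20');
}
```

With this sketch, `showsNewContent('2.21')` and `showsNewContent('dotcom')` select the new content, while `showsNewContent('2.19')` falls through to the `else` branch.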

Static site generators are designed to do one build. They don’t build multiple versions of pages. To support our single-source approach in the Jekyll days, we had to create a backport process, in which writers would build Enterprise Server versions separately from building GitHub.com docs. Backport pull requests had to be reviewed, deployed to staging, and published as a separate process. Over the years, as we released new Enterprise Server versions, the tooling started to fray around the edges. Backports took a long time to build, did weird things, or got forgotten entirely. Ultimately, backports became a liability.

Launching a dynamic help.github.com

When we set out to create a new dynamic site, we started with help.github.com. We built it over six months and carefully coordinated with the writers to swap out the backend, while mostly leaving the content alone. In February 2019, we launched the new Node.js site backed by Express. On the frontend, there is just vanilla JavaScript and CSS using Primer.

It was a big improvement:

- No more build

  - The app loads metadata for all pages at server startup, but the contents are rendered dynamically at page load.

  - Shaved ~10 minutes off deploy times.

- Dynamic version rendering

  - No more backports! Enterprise Server content is loaded at the same time as everything else.

- Fastly CDN

  - Serves as a global edge cache to keep things fast.

- Better search using Algolia

- Less chatops, more GitHub flow

  - Staging and production deployments happen automatically.
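
The "no more build" and "dynamic version rendering" points can be illustrated with a small sketch. It assumes pages are indexed once at startup and rendered per request for whichever version was asked for. The page records, the `{{ version }}` placeholder (a stand-in for real templating), and the function names are all hypothetical.

```javascript
// Hypothetical page records; in a real app these would be read from
// Markdown files once, at server startup.
const sourcePages = [
  { path: '/articles/about-forks', versions: ['dotcom', '2.20', '2.19'], body: 'Forks in {{ version }}' },
  { path: '/articles/about-actions', versions: ['dotcom'], body: 'Actions in {{ version }}' },
];

// Startup: index metadata by path. No HTML is generated here.
function buildPageIndex(pages) {
  return new Map(pages.map((page) => [page.path, page]));
}

// Request time: render one page for one version, or return null (a 404)
// when the page does not exist or is not available in that version.
function renderPage(index, path, version) {
  const page = index.get(path);
  if (!page || !page.versions.includes(version)) return null;
  return page.body.replace('{{ version }}', version);
}

const pageIndex = buildPageIndex(sourcePages);
```

Because every version is rendered from the same index on demand, there is no separate per-version output to build or backport.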

Internationalized docs

Within a few months, the dynamic backend allowed us to reach our next major milestone: internationalization. We launched the Japanese and Simplified Chinese versions of the site in June 2019 and added support for Spanish and Portuguese by the end of the year. (Look for a deep dive post into the internationalization process coming soon!)

This was progress. But developer.github.com was still running on the old static build, and parts of it were starting to break down. We needed to bring the developer content into the new codebase.

Supporting multiple products

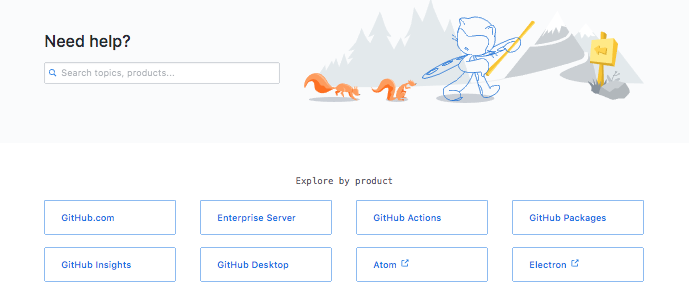

First, we needed to more robustly support the idea of products.

When we originally launched the new help site, the homepage did allow users to choose a product:

But the content for these products was organized in wildly different ways. For example, a directory called content/dotcom/articles contained nearly a thousand Markdown files with no hierarchy. URLs looked like help.github.com/articles/<article>, with no indication of which product they belonged to. Writers and contributors had a hard time navigating the repository. It all “worked,” but it wouldn’t scale.

So we created a new product-centric structure that would be consistent across the site: content/<product>/<category>/<article>, with URLs that matched. To support this change, we developed a new TOC system and refactored product handling on the backend. Once again, we coordinated the changes with writers who were still actively writing, and once again, we left the core Jekyll conventions untouched. We also added support for redirects from the legacy article URLs to the new product-based ones.
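
As a rough sketch of how a file layout and URLs can be kept in step, the functions below derive a URL from a `content/<product>/<category>/<article>` path and build a redirect from the legacy `/articles/<article>` form. The `.md` extension, the function names, and the assumption that article names are unique across products are all illustrative.

```javascript
// Hypothetical: derive the public URL from a content file path of the form
// content/<product>/<category>/<article>.md
function urlForContentFile(filePath) {
  const match = filePath.match(/^content\/([^/]+)\/([^/]+)\/([^/]+)\.md$/);
  if (!match) return null;
  const [, product, category, article] = match;
  return `/${product}/${category}/${article}`;
}

// Hypothetical: map each legacy /articles/<article> URL to its new home.
// Assumes article names do not collide across products.
function buildLegacyRedirects(filePaths) {
  const redirects = new Map();
  for (const filePath of filePaths) {
    const url = urlForContentFile(filePath);
    if (!url) continue;
    const article = url.split('/').pop();
    redirects.set(`/articles/${article}`, url);
  }
  return redirects;
}
```

If two products ever shared an article name, a lookup table keyed on the legacy URL alone would no longer be enough, and the redirect would need an explicit mapping.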

In 2019, we released GitHub Actions as the first new product on the help site.

With a more scalable content organization in place, we were ready to start thinking about how to get developer content into the codebase.

Autogenerating API documentation

Historically, developer.github.com hosted documentation for integrators, including docs for GitHub’s two APIs: REST and GraphQL.

REST docs via OpenAPI

From the time GitHub’s REST docs were first released roughly a decade ago, they were handwritten and updated by humans using unstructured formats. This workflow was sustainable at first, but as the API grew, it became a big drain on writers’ time. Updating REST input and response parameters manually was hard enough, but versioning them for GitHub.com and Enterprise Server was almost impossibly complex. Readers had long asked for code samples and other standard features of API documentation we were unable to provide. We’d dreamed of autogenerating REST docs from a structured schema, but this seemed unattainable for years.

The new codebase opened the door for us to think about autogeneration for real. And we happened into some lucky timing.

Octokit maintainer Gregor Martynus had already started the process of generating an OpenAPI schema that described how GitHub’s API works. This schema happened to be exactly what we needed. Rather than reinventing the wheel, we invested in that existing schema effort and enlisted the services of Redoc.ly, a small firm that specializes in OpenAPI schema design and implementation. We worked with them to get the work-in-progress OpenAPI over the finish line and ready for production use, and created a pipeline to consume and render the docs from OpenAPI.
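
To give a sense of what consuming an OpenAPI description involves, here is a minimal sketch that flattens the `paths` object into one record per operation, which a template could then render. The sample document is a trimmed, hand-written fragment, and `listOperations` is a hypothetical name; none of this is the actual pipeline.

```javascript
// A trimmed, hand-written OpenAPI-style fragment, for illustration only.
const schema = {
  openapi: '3.0.3',
  paths: {
    '/repos/{owner}/{repo}': {
      get: {
        summary: 'Get a repository',
        parameters: [
          { name: 'owner', in: 'path', required: true },
          { name: 'repo', in: 'path', required: true },
        ],
      },
    },
  },
};

// HTTP methods that may appear as keys of an OpenAPI path item.
const HTTP_METHODS = ['get', 'put', 'post', 'delete', 'patch', 'head', 'options', 'trace'];

// Flatten paths and methods into one record per operation, ready to render.
function listOperations(doc) {
  const operations = [];
  for (const [path, pathItem] of Object.entries(doc.paths)) {
    for (const method of HTTP_METHODS) {
      const operation = pathItem[method];
      if (!operation) continue;
      operations.push({
        verb: method.toUpperCase(),
        path,
        summary: operation.summary,
        parameters: (operation.parameters || []).map((p) => p.name),
      });
    }
  }
  return operations;
}
```

Because parameters and summaries come straight from the schema, updating the schema is what updates the docs, which is the property hand-written reference pages lacked.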

Check out the new REST docs: http://docs.github.com/rest/reference

GraphQL docs

GraphQL is a different story from REST. Since GitHub first released its GraphQL API in 2017, we’ve had a pipeline for autogenerating docs from a schema using https://github.com/gjtorikian/graphql-docs.

This tooling worked well, but it was written in Ruby, and with the new Node.js backend, we needed something more JavaScript-friendly. We looked for existing JavaScript GraphQL docs generators but didn’t find any that fit our specific needs. So we rolled our own.

We wrote a script that takes a GraphQL schema as input, does some sanitization, and outputs JSON files containing only the data needed for rendering documentation. Our HTML files loop over that JSON data and render it on page load.
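
The shape of such a script might look like the sketch below. It assumes the input is a GraphQL introspection result and that "sanitization" means dropping built-in types and trimming descriptions; both are guesses for illustration, and `toDocsJson` is a hypothetical name.

```javascript
// Hand-written sample shaped like a GraphQL introspection result.
const introspection = {
  __schema: {
    types: [
      {
        kind: 'OBJECT',
        name: 'Repository',
        description: 'A repository.  ',
        fields: [{ name: 'name', description: 'The name.' }],
      },
      { kind: 'OBJECT', name: '__Type', description: 'Introspection type.', fields: [] },
      { kind: 'SCALAR', name: 'String', description: null, fields: null },
    ],
  },
};

// Keep only object types that are not built-in (names starting with "__"),
// and only the properties a docs template would need.
function toDocsJson(result) {
  return result.__schema.types
    .filter((type) => type.kind === 'OBJECT' && !type.name.startsWith('__'))
    .map((type) => ({
      name: type.name,
      description: (type.description || '').trim(),
      fields: (type.fields || []).map((field) => ({
        name: field.name,
        description: field.description || '',
      })),
    }));
}
```

Writing `JSON.stringify(toDocsJson(introspection))` to a file would give the templates a small, stable input instead of the full schema.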

The script runs via a scheduled GitHub Actions workflow and automatically opens and merges PRs with the updates. This means writers never have to touch GraphQL documentation; it publishes itself.

Check out the new GraphQL docs: http://docs.github.com/graphql/reference

Scripting the content migration

In addition to API docs, developer.github.com contained content about GitHub and OAuth apps, GitHub Marketplace, and webhooks. The majority of this content is vanilla Markdown, so the project requirements were (finally) straightforward: import the files, process them, run tests. We wrote scripts to do this in a repeatable process.

The content strategist on the docs team created a comprehensive spreadsheet that mapped all the old developer content to their new product-based locations, with titles and intros for each. This formed the basis of our scripted efforts. We ran the scripts several times, doing reviews and making changes each time, before unveiling the final documentation.
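
A spreadsheet-driven migration step could be sketched roughly as follows. The row shape, the example paths, and the choice to write titles and intros as YAML frontmatter are all assumptions made for illustration; the post does not describe the scripts in this detail.

```javascript
// Hypothetical row, as it might be exported from the mapping spreadsheet.
const mappingRows = [
  {
    oldPath: 'apps/about-apps.md',
    newPath: 'content/developers/apps/about-apps.md',
    title: 'About apps',
    intro: 'An overview of apps.',
  },
];

// Produce the migrated file: the new location, plus YAML frontmatter placed
// above the original Markdown body. JSON.stringify yields double-quoted
// strings, which are also valid YAML scalars.
function migrateFile(row, oldContents) {
  const frontmatter = [
    '---',
    `title: ${JSON.stringify(row.title)}`,
    `intro: ${JSON.stringify(row.intro)}`,
    '---',
  ].join('\n');
  return { path: row.newPath, contents: `${frontmatter}\n${oldContents.trim()}\n` };
}
```

Keeping the step a pure function of a row and the old file contents is what makes it safe to rerun after each review round.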

Check out the new docs: http://docs.github.com/developers

Redirecting all the things

Getting a 404 on a documentation site is an abrupt end to a learning experience. GitHub’s docs team makes a commitment to prevent 404s when content files get renamed or moved around. There are a number of ways we support 20,000+ redirects in the codebase, and they can get complex.

For example, if you go to an Enterprise URL without a version, such as https://docs.github.com/enterprise, the site redirects you to the latest version by injecting the number in the URL. But we have to be careful – what if the URL happens to include enterprise somewhere but is not a real Enterprise Server path? No number should be injected in those cases.
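
One way to express that caution in code is to inject the version only when `enterprise` is the first path segment and no version follows it. This is a simplified sketch: it ignores language prefixes, assumes versions look like `2.21`, and `injectEnterpriseVersion` is a hypothetical name.

```javascript
// Hypothetical: only inject when "enterprise" is the first path segment and
// the next segment is not already a version number.
function injectEnterpriseVersion(path, latestVersion) {
  const segments = path.split('/').filter(Boolean);
  if (segments[0] !== 'enterprise') return path;
  if (/^\d+\.\d+$/.test(segments[1] || '')) return path;
  return '/' + ['enterprise', latestVersion, ...segments.slice(1)].join('/');
}
```

Matching on the segment rather than on the substring is what keeps a path that merely contains the word "enterprise" from being rewritten.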

We also redirect any URL without a language code to use the /en prefix. And we have special link rewriting under the hood to make sure that if you are on a Japanese page, all links to other GitHub articles take you to /ja versions of those pages instead of /en versions.
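
Both behaviors can be sketched in a few lines. The language list is abbreviated to the two codes named above, the functions are hypothetical, and real link rewriting would likely work on a parsed document rather than a regular expression.

```javascript
// Language codes named in the post; a real list would be longer.
const LANGUAGE_CODES = ['en', 'ja'];

// Return the redirect target for a path lacking a language code, or null
// when the path already has one.
function languageRedirect(path) {
  const first = path.split('/')[1];
  if (LANGUAGE_CODES.includes(first)) return null;
  return path === '/' ? '/en' : `/en${path}`;
}

// Rewrite internal links in rendered HTML so they stay in the page's
// language. The (\/|") group ensures only a whole "/en" segment matches,
// so a link such as "/enterprise" is left alone.
function rewriteLinks(html, languageCode) {
  return html.replace(/href="\/en(\/|")/g, `href="/${languageCode}$1`);
}
```

For a Japanese page, `rewriteLinks(html, 'ja')` turns `href="/en/github"` into `href="/ja/github"` while leaving external links and other attributes untouched.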

Soon we will enable blanket redirects to point most https://developer.github.com links to https://docs.github.com. That step won’t be too hard, but in the course of the migration, we changed the names and locations of much of the developer content. For example, /v3 became /rest/reference, /apps became /developers/apps, and so on. To support all these redirects, we worked from a list of the top few hundred developer.github.com URLs from Google Analytics to whittle down dead links, path by path.

These redirects will help GitHub’s users arrive at the content they want when they navigate docs.github.com or follow legacy bookmarks or links.

What’s next

This is an exciting time for content at GitHub. With the foundation we built for docs.github.com, we can’t wait to continue improving the experience for people creating and using GitHub’s content. Keep an eye out for more behind-the-scenes posts about docs.github.com!
