A brief history of code search at GitHub

This blog post tells the story of why we built a new search engine optimized for code.

We recently launched a technology preview for the next-generation code search we have been building. If you haven’t signed up already, go ahead and do it now!

We want to share more about our work on code exploration, navigation, search, and developer productivity. Recently, we substantially improved the precision of our code navigation for Python, and open-sourced the tools we developed for this. The stack graph formalism we developed will form the basis for precise code navigation support for more languages, and will even allow us to empower language communities to build and improve support for their own languages, similarly to how we accept contributions to github/linguist to expand GitHub’s syntax highlighting capabilities.

This blog post is part of the same series, and tells the story of why we built a new search engine optimized for code over the past 18 months. What challenges did we set ourselves? What is the historical context, and why could we not continue to build on off-the-shelf solutions? Read on to find out.

What’s our goal?

We set out to provide an experience that could become an integral part of every developer’s workflow. This has imposed hard constraints on the features, performance, and scalability of the system we’re building. In particular:

- Searching code is different: many standard techniques (like stemming and tokenization) are at odds with the kind of searches we want to support for source code. Identifier names and punctuation matter. We need to be able to match substrings, not just whole “words”. Specialized queries can require wildcards or even regular expressions. In addition, scoring heuristics tuned for natural language and web pages do not work well for source code.

- The scale of the corpus: GitHub hosts over 200 million repositories, with over 61 million repositories created in the past year. We aim to support global queries across all of them, now and in the foreseeable future.

- The rate of change: over 170 million pull requests were merged in the past year, and this does not even account for code pushed directly to a branch. We would like our index to reflect the updated state of a repository within a few minutes of a push event.

- Search performance and latency: developers want their tools to be blazingly fast, and if we want to become part of every developer’s workflow we have to satisfy that expectation. Despite the scale of our index, we want p95 query times to be (well) under a second. Most user queries, or queries scoped to a set of repositories or organizations, should be much faster than that.

Over the years, GitHub has leveraged several off-the-shelf solutions, but as the requirements evolved over time, and the scale problem became ever more daunting, we became convinced that we had to build a bespoke search engine for code to achieve our objectives.

The early years

In the beginning, GitHub announced support for code search, as you might expect from a website with the tagline of “Social Code Hosting.” And all was well.

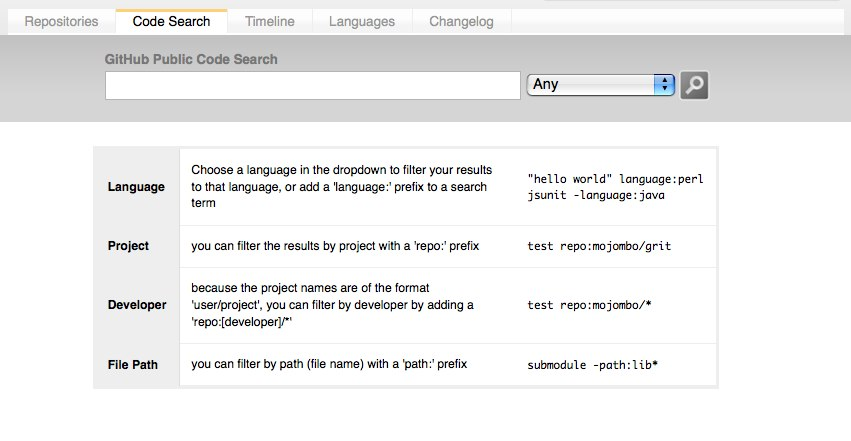

Except… you might note the disclaimer “GitHub Public Code Search.” This first iteration of global search worked by indexing all public documents into a Solr instance, which determined the results you got. While this nicely side-steps visibility and authorization concerns (everything is public!), not allowing private repositories to be searched would be a major functionality gap. The solution?

Image credit: Patrick Linskey on Stack Overflow

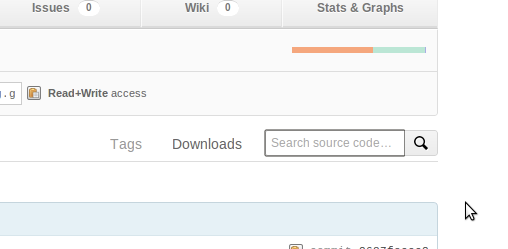

The repository page showed a “Search source code” field. For public repos, this was still backed by the Solr index, scoped to the active repository. For private repos, it shelled out to git grep.

Quite soon after shipping this, the then-in-beta Google Code Search began crawling public repositories on GitHub too, thus giving developers an alternative way of searching them. (Ultimately, Google Code Search was discontinued a few years later, though Russ Cox’s excellent blog post on how it worked remains a great source of inspiration for successor projects.)

Unfortunately, the different search experience for public and private repositories proved pretty confusing in practice. In addition, while git grep is a widely understood gold standard for how to search the contents of a Git repository, it operates without a dedicated index and hence works by scanning each document—taking time proportional to the size of the repository. This could lead to resource exhaustion on the Git hosts, and to an unresponsive web page, making it necessary to introduce timeouts. Large private repositories remained unsearchable.

Scaling with Elasticsearch

By 2010, the search landscape was seeing considerable upheaval. Solr joined Lucene as a subproject, and Elasticsearch sprang up as a great way of building and scaling on top of Lucene. While Elasticsearch wouldn’t hit a 1.0.0 release until February 2014, GitHub started experimenting with adopting it in 2011. An initial tentative experiment that indexed gists into Elasticsearch to make them searchable showed great promise, and before long it was clear that this was the future for all search on GitHub, including code search.

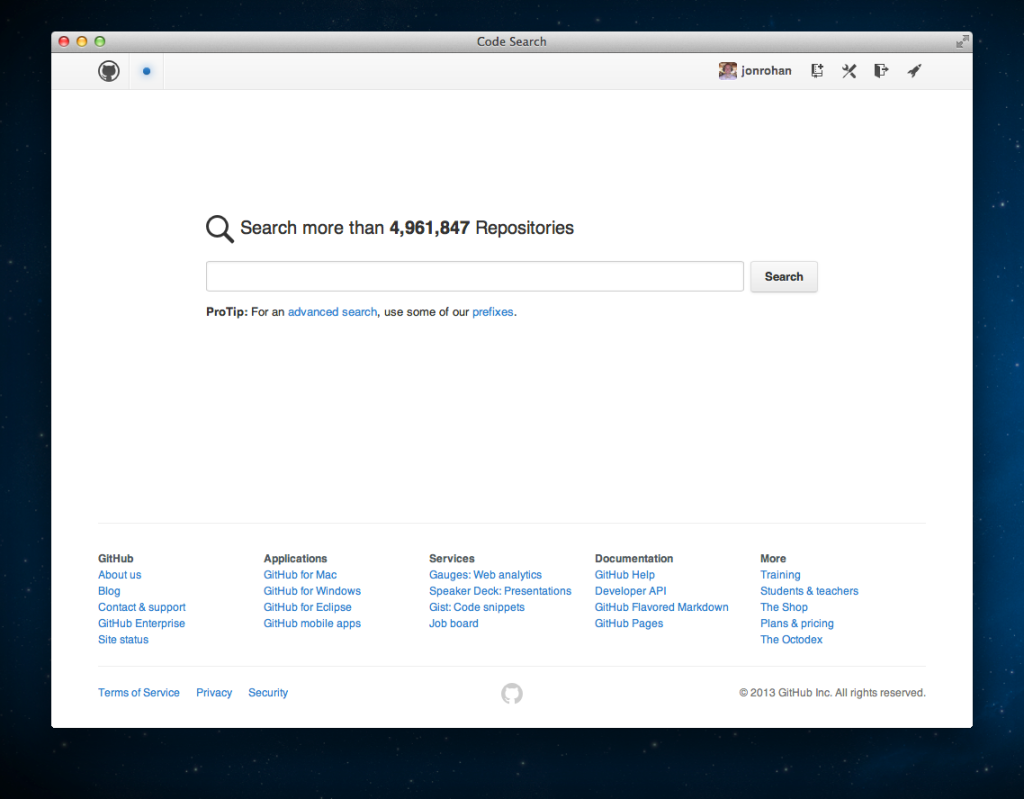

Indeed in early 2013, just as Google Code Search was winding down, GitHub launched a whole new code search backed by an Elasticsearch cluster, consolidating the search experience for public and private repositories and updating the design. The search index covered almost five million repositories at launch.

The scale of operations was definitely challenging, and within days or weeks of the launch GitHub experienced its first code search outages. The postmortem blog post is quite interesting on several levels, and it gives a glimpse of the cluster size (26 storage nodes with 2 TB of SSD storage each), utilization (67% of storage used), environment (Elasticsearch 0.19.9 and 0.20.2, Java 6 and 7), and indexing complexity (several months to backfill all repository data). Several bugs in Elasticsearch were identified and fixed, allowing GitHub to resume operations on the code search service.

In November 2013, Elasticsearch published a case study on GitHub’s code search cluster, again including some interesting data on scale. By that point, GitHub was indexing eight million repositories and responding to 5 search requests per second on average.

In general, our experience working with Elasticsearch has been truly excellent. It powers all kinds of search on GitHub.com, doing an excellent job throughout. The code search index is by far the largest cluster we operate, and it has grown in scale by another 20-40x since the case study (to 162 nodes, comprising 5184 vCPUs, 40TB of RAM, and 1.25PB of backing storage, supporting a query load of 200 requests per second on average and indexing over 53 billion source files). It is a testament to the capabilities of Elasticsearch that we have got this far with essentially an off-the-shelf search engine.

My code is not a novel

Elasticsearch excelled at most search workloads, but almost immediately some wrinkles and friction started cropping up in connection with code search. Perhaps the most widely observed is this comment from the code search documentation:

You can’t use the following wildcard characters as part of your search query:

. , : ; / \ ` ' " = * ! ? # $ & + ^ | ~ < > ( ) { } [ ] @. The search will simply ignore these symbols.

Source code is not like normal text, and those “punctuation” characters actually matter. So why are they ignored by GitHub’s production code search? It comes down to how our ingest pipeline for Elasticsearch is configured.


When documents are added to an Elasticsearch index, they are passed through a process called text analysis, which converts unstructured text into a structured format optimized for search. Commonly, text analysis is configured to normalize away details that don’t matter to search (for example, case folding the document to provide case-insensitive matches, or compressing runs of whitespace into one, or stemming words so that searching for “ingestion” also finds “ingest pipeline”). Ultimately, it performs tokenization, splitting the normalized input document into a list of tokens whose occurrence should be indexed.

Many features and defaults available to text analysis are geared towards indexing natural-language text. To create an index for source code, we defined a custom text analyzer, applying a carefully selected set of normalizations (for example, case-folding and compressing whitespace make sense, but stemming does not). Then, we configured a custom pattern tokenizer, splitting the document using the following regular expression: %q_[.,:;/\\\\`'"=*!@?#$&+^|~<>(){}\[\]\s]_. If you look closely, you’ll recognise the list of characters that are ignored in your query string!

The tokens resulting from this split then undergo a final round of splitting, extracting word parts delimited in CamelCase and snake_case as additional tokens to make them searchable. To illustrate, suppose we are ingesting a document containing this declaration: pub fn pthread_getname_np(tid: ::pthread_t, name: *mut ::c_char, len: ::size_t) -> ::c_int;. Our text analysis phase would pass the following list of tokens to Elasticsearch to index: pub fn pthread_getname_np pthread getname np tid pthread_t pthread t name mut c_char c char len size_t size t c_int c int. The special characters simply do not figure in the index; instead, the focus is on words recovered from identifiers and keywords.
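To make the two splitting stages concrete, here is a minimal Python sketch of an analyzer along these lines. It is an illustration rather than our actual Elasticsearch configuration: the names `analyze`, `SPLIT_RE`, and `PART_RE` are made up for this example, the word-part regex is one plausible way to handle CamelCase and snake_case, and the declaration is shortened to its parameter list.

```python
import re

# Characters the analyzer splits on (the list quoted above), plus whitespace.
SPECIAL = ".,:;/\\`'\"=*!@?#$&+^|~<>(){}[]"
SPLIT_RE = re.compile("[" + re.escape(SPECIAL) + r"\s]+")

# Word parts inside an identifier: acronyms, (capitalized) words, digit runs.
# This pattern is an assumption, not the production rule.
PART_RE = re.compile(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|[0-9]+")


def analyze(text):
    """Return the tokens a code-oriented analyzer might hand to the index."""
    tokens = []
    for raw in SPLIT_RE.split(text):
        if not raw:
            continue  # skip empty pieces at the start or end of the input
        tokens.append(raw.lower())  # case-fold the full identifier
        parts = PART_RE.findall(raw)
        if len(parts) > 1:  # add word parts only when the identifier splits
            tokens.extend(part.lower() for part in parts)
    return tokens


decl = "pub fn pthread_getname_np(tid: ::pthread_t, name: *mut ::c_char)"
print(" ".join(analyze(decl)))
# pub fn pthread_getname_np pthread getname np tid pthread_t pthread t name mut c_char c char
```

Note that no token in the output is `thread` or `thread_getname`: a lookup by token can only find what the analyzer chose to emit.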

Designing a text analyzer is tricky, and involves hard trade-offs between index size and performance on the one hand, and the types of queries that can be answered on the other. The approach described above was the result of careful experimentation with different strategies, and represented a good compromise that has allowed us to launch and evolve code search for almost a decade.

Another consideration for source code is substring matching. Suppose that I want to find out how to get the name of a thread in Rust, and I vaguely remember the function is called something like thread_getname. Searching for thread_getname org:rust-lang will give no results on our Elasticsearch index; meanwhile, if I cloned rust-lang/libc locally and used git grep, I would instantly find pthread_getname_np. More generally, power users reach for regular expression searches almost immediately.

The earliest internal discussions of this that I can find date to October 2012, more than a year before the public release of Elasticsearch-based code search. We considered various ways of refining the Elasticsearch tokenization (in fact, we turn pthread_getname_np into the tokens pthread, getname, np, and pthread_getname_np—if I had searched for pthread getname rather than thread_getname, I would have found the definition of pthread_getname_np). We also evaluated trigram tokenization as described by Russ Cox. Our conclusion was summarized by a GitHub employee as follows:

The trigram tokenization strategy is very powerful. It will yield wonderful search results at the cost of search time and index size. This is the approach I would like to take, but there is work to be done to ensure we can scale the ElasticSearch cluster to meet the needs of this strategy.

Given the initial scale of the Elasticsearch cluster mentioned above, it wasn’t viable to substantially increase storage and CPU requirements at the time, and so we launched with a best-effort tokenization tuned for code identifiers.
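For readers unfamiliar with the trigram approach, the following Python sketch shows the basic idea in the spirit of Russ Cox's description: index every three-character substring, intersect the posting lists for the query's trigrams, then confirm candidates with a real scan. The class and method names are hypothetical, and the sketch ignores everything that makes this hard at scale (index size, compression, sharding).

```python
from collections import defaultdict


def trigrams(text):
    """All overlapping three-character substrings of text."""
    return {text[i:i + 3] for i in range(len(text) - 2)}


class TrigramIndex:
    def __init__(self):
        self.postings = defaultdict(set)  # trigram -> set of document IDs
        self.docs = {}                    # document ID -> content

    def add(self, doc_id, content):
        self.docs[doc_id] = content
        for gram in trigrams(content):
            self.postings[gram].add(doc_id)

    def search(self, query):
        """Substring search; the query needs at least three characters."""
        grams = trigrams(query)
        if not grams:
            raise ValueError("query must be at least 3 characters long")
        # A matching document must contain every trigram of the query...
        candidates = set.intersection(
            *(self.postings.get(gram, set()) for gram in grams)
        )
        # ...but trigrams may occur scattered, so verify with a real scan.
        return sorted(d for d in candidates if query in self.docs[d])


index = TrigramIndex()
index.add("libc.rs", "pub fn pthread_getname_np(tid: ::pthread_t) -> ::c_int;")
index.add("decoy.rs", "let thread_get = getname();")
print(index.search("thread_getname"))  # ['libc.rs']
```

The second document contains every trigram of `thread_getname` without containing the string itself, which is why the final verification step is needed. The posting lists also hint at the cost: every document contributes an entry for each distinct trigram it contains.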

Over the years, we kept coming back to this discussion. One promising idea for supporting special characters, inspired by some conversations with Elasticsearch experts at Elasticon 2016, was to use a Lucene tokenizer pattern that split code on runs of whitespace, but also on transitions from word characters to non-word characters (crucially, using lookahead/lookbehind assertions, without consuming any characters in this case; this would create a token for each special character). This would allow a search for “answer >= 42” to find the source text answer >= 42 (disregarding whitespace, but including the comparison). Experiments showed this approach took 43-100% longer to index code, and produced an index that was 18-28% larger than the baseline. Query performance also suffered: at best, it was as fast as the baseline, but some queries (especially those that used special characters, or otherwise split into many tokens) were up to 4x slower. In the end, a typical query slowdown of 2.1x seemed like too high a price to pay.
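As a rough illustration of the tokenizer idea, here is a Python sketch that splits on whitespace and on zero-width boundaries around special characters. The regular expression is an approximation written for this example, not the Lucene pattern we experimented with, and `tokenize` and `phrase_match` are hypothetical helpers. Splitting on empty matches requires Python 3.7 or later.

```python
import re

# Split on whitespace runs, and (without consuming any characters) wherever
# a special character touches a neighbouring word or special character.
BOUNDARY_RE = re.compile(r"\s+|(?<=\w)(?=[^\w\s])|(?<=[^\w\s])(?=\S)")


def tokenize(text):
    """Words stay whole; every special character becomes its own token."""
    return [token for token in BOUNDARY_RE.split(text) if token]


def phrase_match(query, source):
    """True if the query's tokens appear as a consecutive run in the source."""
    q, s = tokenize(query), tokenize(source)
    return any(s[i:i + len(q)] == q for i in range(len(s) - len(q) + 1))


print(tokenize("answer >= 42"))                             # ['answer', '>', '=', '42']
print(phrase_match("answer >= 42", "if (answer>=42) {"))    # True
print(phrase_match("answer > 42", "if (answer>=42) {"))     # False
```

The example also suggests where the extra cost comes from: a short expression turns into several single-character tokens, each of which needs an entry in the index and a lookup at query time.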

By 2019, we had made significant investments in scaling our Elasticsearch cluster simply to keep up with the organic growth of the underlying code corpus. This gave us some performance headroom, and at GitHub Universe 2019 we felt confident enough to announce an “exact-match search” beta, which essentially followed the ideas above and was available for allow-listed repositories and organizations. We projected around a 1.3x increase in Elasticsearch resource usage for this index. The experience from the limited beta was very illuminating, but it proved too difficult to balance the additional resource requirements with ongoing growth of the index. In addition, even after the tokenization improvements, there were still numerous unsupported use cases (like substring searches and regular expressions) that we saw no path towards. Ultimately, exact-match search was sunset in just over half a year.

Project Blackbird

Actually, a major factor in pausing investment in exact-match search was a very promising research prototype search engine, internally code-named Blackbird. The project had been kicked off in early 2020, with the goal of determining which technologies would enable us to offer code search features at GitHub scale, and it showed a path forward that has led to the technology preview we launched last week.

Let’s recall our ambitious objectives: comprehensively index all source code on GitHub, support incremental indexing and document deletion, and provide lightning-fast exact-match and regex searches (specifically, a p95 of under a second for global queries, with correspondingly lower targets for org-scoped and repo-scoped searches). Do all this without using substantially more resources than the existing Elasticsearch cluster. Integrate other sources of rich code intelligence information available on GitHub. Easy, right?

We found that no off-the-shelf code indexing solution could satisfy those requirements. Russ Cox’s trigram index for code search only stores document IDs rather than positions in the posting lists; while that makes it very space-efficient, performance degrades rapidly with a large corpus size. Several successor projects augment the posting lists with position information or other data; this comes at a large storage and RAM cost (Zoekt reports a typical index size of 3.5x corpus size) that makes it too expensive at our scale. The sharding strategy is also crucial, as it determines how evenly distributed the load is. And any significant per-repo overhead becomes prohibitive when considering scaling the index to all repositories on GitHub.

In the end, Blackbird convinced us to go all-in on building a custom search engine for code. Written in Rust, it creates and incrementally maintains a code search index sharded by Git blob object ID; this gives us substantial storage savings via deduplication and guarantees a uniform load distribution across shards (something that classic approaches sharding by repo or org, like our existing Elasticsearch cluster, lack). It supports regular expression searches over document content and can capture additional metadata—for example, it also maintains an index of symbol definitions. It meets our performance goals: while it’s always possible to come up with a pathological search that misses the index, it’s exceptionally fast for “real” searches. The index is also extremely compact, weighing in at about ⅔ of the (deduplicated) corpus size.
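To see why sharding by blob object ID gives both deduplication and an even spread, consider this small Python sketch. The blob ID computation follows Git's standard SHA-1 object format; the `shard_for` function is a hypothetical assignment scheme for illustration, not a description of how Blackbird actually maps IDs to shards.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Object ID Git assigns to a blob: SHA-1 over a short header plus content."""
    header = b"blob %d\x00" % len(content)
    return hashlib.sha1(header + content).hexdigest()


def shard_for(blob_id: str, num_shards: int) -> int:
    """Hypothetical scheme: read the ID as an integer, take it modulo the shard count."""
    return int(blob_id, 16) % num_shards


# The same license file in two repositories, plus one unrelated file.
# Repository names and paths are made up.
files = {
    ("octo/app", "LICENSE"): b"MIT\n",
    ("octo/fork", "LICENSE"): b"MIT\n",
    ("octo/app", "main.rs"): b"fn main() {}\n",
}
blob_ids = {key: git_blob_id(content) for key, content in files.items()}
unique_blobs = set(blob_ids.values())
print(len(files), "files,", len(unique_blobs), "blobs to index")  # 3 files, 2 blobs to index
```

Identical content always hashes to the same ID, so forks and vendored copies collapse into a single indexed document. Because the ID is a cryptographic hash, it is effectively random with respect to repository or organization, so one very large or very popular repository cannot overload a single shard.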

One crucial realization was that if we want to index all code on GitHub into a single index, result scoring and ranking are absolutely critical; you really need to find useful documents first. Blackbird implements a number of heuristics, some code-specific (ranking up definitions and penalizing test code), and others general-purpose (ranking up complete matches and penalizing partial matches, so that when searching for thread an identifier called thread will rank above thread_id, which will rank above pthread_getname_np). Of course, the repository in which a match occurs also influences ranking. We want to show results from popular open-source repositories before a random match in a long-forgotten repository created as a test.
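A toy version of such heuristics might look like the Python sketch below. The function and its weights are invented for illustration and bear no relation to Blackbird's actual scoring; the point is only that a coverage-style measure already produces the ordering described above.

```python
def score(query, identifier, is_definition=False, in_test_code=False):
    """Hypothetical relevance score; all weights are made up for illustration."""
    if query not in identifier:
        return 0.0
    # Fraction of the identifier covered by the query: 1.0 for a complete match.
    value = len(query) / len(identifier)
    if is_definition:
        value *= 2.0  # rank definitions up
    if in_test_code:
        value *= 0.5  # penalize test code
    return value


hits = ["pthread_getname_np", "thread_id", "thread"]
ranked = sorted(hits, key=lambda name: score("thread", name), reverse=True)
print(ranked)  # ['thread', 'thread_id', 'pthread_getname_np']
```

A real ranking function would combine many more signals, including the repository-level ones mentioned above.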

All of this is very much a work in progress. We are continuously tuning our scoring and ranking heuristics, optimizing the index and query process, and iterating on the query language. We have a long list of features to add. But we want to get what we have today into the hands of users, so that your feedback can shape our priorities.

We have more to share about the work we’re doing to enhance developer productivity at GitHub, so stay tuned.

The shoulders of giants

Modern software development is about collaboration and about leveraging the power of open source. Our new code search is no different. We wouldn’t have gotten anywhere close to its current state without the excellent work of tens of thousands of open source contributors and maintainers who built the tools we use, the libraries we depend on, and whose insightful ideas we could adopt and develop. A small selection of shout-outs and thank-yous:

- The communities of the languages and frameworks we build on: Rust, Go, and React. Thanks for enabling us to move fast.

- @BurntSushi: we are inspired by Andrew’s prolific output, and his work on the regex and aho-corasick crates in particular has been invaluable to us.

- @lemire’s work on fast bit packing is integral to our design, and we drew a lot of inspiration from his optimization work more broadly (especially regarding the use of SIMD). Check out his blog for more.

- Enry and Tree-sitter, which power Blackbird’s language detection and symbol extraction, respectively.
