Code scanning and Ruby: turning source code into a queryable database

A deep dive into how GitHub adds support for new languages to CodeQL.

We recently added beta support for Ruby to the CodeQL engine that powers GitHub code scanning, as part of our efforts to make it easier for developers to build and ship secure code. Ruby support is particularly exciting for us, since GitHub itself is a Ruby on Rails app. Any improvements we or the community make to CodeQL’s vulnerability detection will help secure our own code, in addition to helping Ruby’s open source ecosystem.

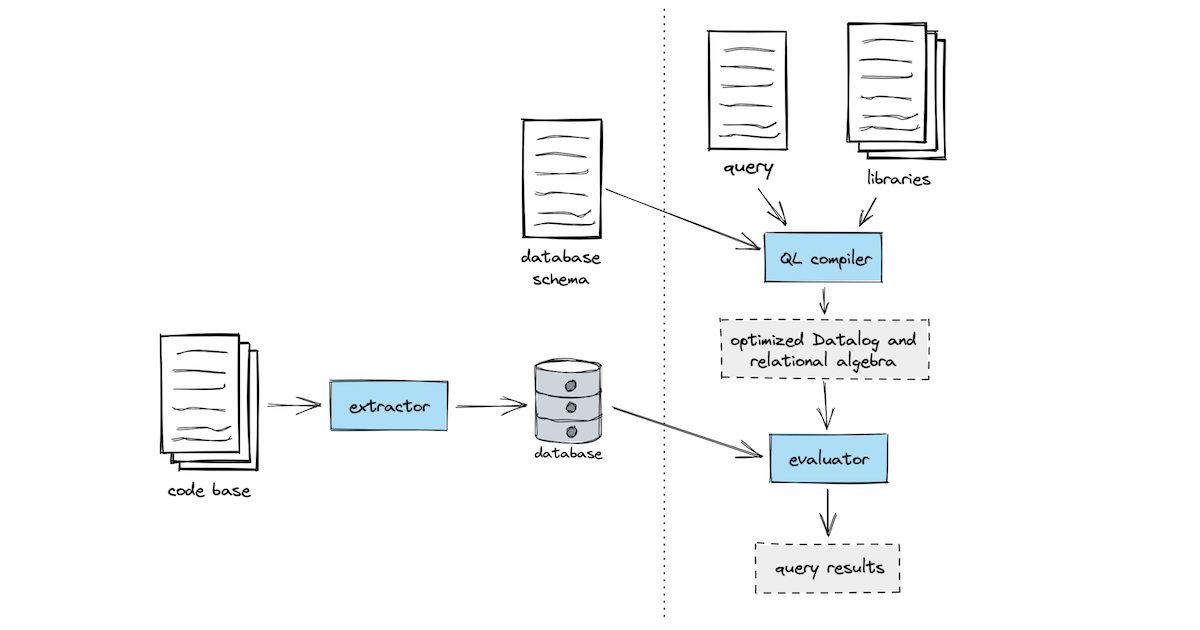

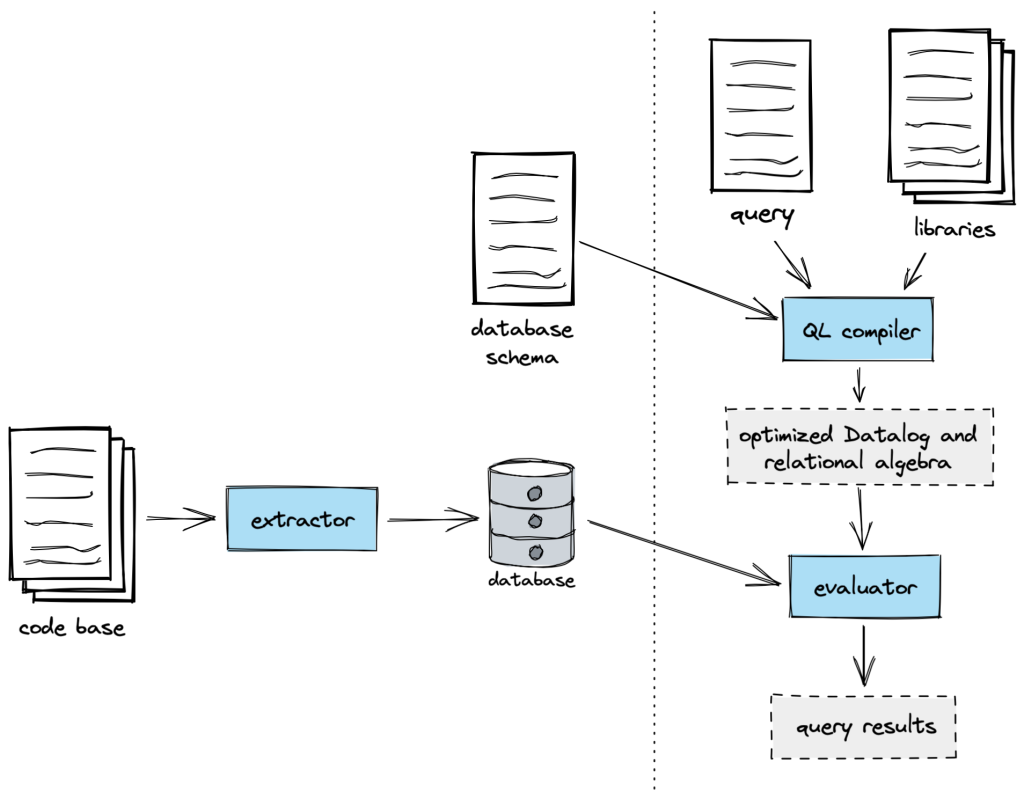

CodeQL’s static analysis works by running queries over a database representation of a program. The following diagram gives a high-level overview of the process:

While there’s plenty I’d love to tell you about how we write queries, and about our rich set of analysis libraries, I’m going to focus in this post on how we build those databases: how we’ve typically done it for other languages and how we did things a little differently for Ruby.

Introducing the extractor

If you want to be able to create databases for a new language in CodeQL, you need to write an extractor. An extractor is a tool that:

- parses the source code to obtain a parse tree,

- converts that parse tree into a relational form, and

- writes those relations (database tables) to disk.

You also need to define a schema for the database produced by your extractor. The schema specifies the names of tables in the database and the names and types of columns in each table.

Let’s visit parse trees, parsers, and database schemas in more detail.

Parse trees

Source code is just text. If you’re writing a compiler, performing static analysis, or even just doing syntax highlighting, you want to convert that text to a structure that is easier to work with. The structure we use is a parse tree, also known as a concrete syntax tree.

Here’s a friendly little Ruby program that prints a couple of greetings:

puts("Hello", "Ahoy")

The program contains three expressions: the string literals "Hello" and "Ahoy" and a call to a method named puts. I could draw a minimal parse tree for the program like this:

Compilers and static analysis tools like CodeQL often convert the parse tree into a simpler format known as an abstract syntax tree (AST), so-called because it abstracts away some of the syntactic details that do not affect the meaning of the program, such as comments, whitespace, and parentheses. If I wanted – and if I assume that puts is a method built into the language – I could write a simple interpreter that executes the program by walking the AST.
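To make the idea of walking an AST concrete, here is a minimal sketch of such an interpreter. The node types (`StringLiteral`, `MethodCall`) and the `evaluate` method are invented for this illustration and are not part of CodeQL or tree-sitter; the only built-in method it understands is `puts`.

```ruby
# Hypothetical AST node types for the greeting program (illustration only).
StringLiteral = Struct.new(:value)
MethodCall = Struct.new(:name, :arguments)

# Walks the tree: evaluates the arguments first, then performs the call.
# Printed text is appended to `output` so the result is easy to inspect.
def evaluate(node, output)
  case node
  when StringLiteral
    node.value
  when MethodCall
    args = node.arguments.map { |arg| evaluate(arg, output) }
    raise NotImplementedError, "unknown method #{node.name}" unless node.name == "puts"
    args.each { |arg| output << "#{arg}\n" } # mimic puts: one line per argument
    nil                                       # puts returns nil
  else
    raise ArgumentError, "unknown node #{node.inspect}"
  end
end

ast = MethodCall.new("puts", [StringLiteral.new("Hello"), StringLiteral.new("Ahoy")])
output = +""
evaluate(ast, output)
print output
```

Note that the tree carries no parentheses or commas: those details of the source text are not needed to run the program.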

For CodeQL, the Ruby extractor stores the parse tree in the database, since those syntactic details can sometimes be useful. We then provide a query library to transform this into an AST, and most of our queries and query libraries build on top of that transformed AST, either directly or after applying further transformations (such as the construction of a data-flow graph).

Choosing a parser

For each language we’ve supported so far, we’ve used a different parser, choosing the one that gives the best performance and compatibility across all the codebases we want to analyze. For example, our C# extractor parses C# source code using Microsoft’s open source Roslyn compiler, while our JavaScript extractor uses a parser that was derived from the Acorn project.

For Ruby, we decided to use tree-sitter and its Ruby parser. Tree-sitter is a parser framework developed by our friends in GitHub’s Semantic Code Team and is the technology underlying the syntax highlighting and code navigation (jump-to-definition) features on GitHub.com.

Tree-sitter is fast, has excellent error recovery, and provides bindings for several languages (we chose to use the Rust bindings, which are particularly pleasant). It has parsers not only for Ruby, but for most other popular languages, and provides machine-readable descriptions of each language’s grammar. These opened up some exciting possibilities for us, which I’ll describe later.

In practice, the parse tree produced by tree-sitter for our example program is a little more complicated than the diagram above (for one thing, string literal nodes have child nodes in order to support string interpolation). If you’re curious, you can go to the tree-sitter playground, select Ruby from the dropdown, and try it for yourself.

Handling ambiguity

Ruby has a flexible syntax that delights (most of) the people who program in it. The word ‘elegant’ gets thrown around a lot, and Ruby programs often read more like English prose than code. However, this elegance comes at a significant cost: the language is ambiguous in several places, and dealing with it in a parser creates a lot of complexity.

> “Ruby is simple in appearance, but is very complex inside, just like our human body.” (Yukihiro “Matz” Matsumoto)

Matz’s Ruby Interpreter (MRI) is the canonical implementation of the Ruby language, and its parser is implemented in the file parse.y (from which Bison generates the actual parser code). That file is currently 14,000 lines long, which should give you a sense of the complexity involved in parsing Ruby.

One interesting example of Ruby’s ambiguity occurs when parsing an unadorned identifier like foo. If it had parentheses – foo() – we could parse it unambiguously as a method call, but parentheses are optional in Ruby. Is foo a method call with zero arguments, or is it a variable reference? Well, that ultimately depends on whether a variable named foo is in scope. If so, it’s a variable reference; otherwise, we can assume it’s a call to a method named foo.

What type of parse-tree node should the parser return in this case? The parser does not track which variables are in scope, so it cannot decide. The way tree-sitter and the extractor handle this is to emit an identifier node rather than a call node. It’s only later, as we evaluate queries, that our AST library builds a control-flow graph of the program and uses that to decide whether the node is a method call or a variable reference.
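The ambiguity is easy to reproduce in plain Ruby. In the short sketch below (the method names `foo` and `resolve_foo` are just placeholders), the same bare token `foo` means two different things depending on whether an assignment to `foo` appears earlier in the same scope:

```ruby
def foo
  "method"
end

def resolve_foo
  first = foo       # no local variable named foo yet: treated as a method call
  foo = "variable"  # from here on, a local variable named foo is in scope
  second = foo      # the same token is now a variable reference
  third = foo()     # explicit parentheses force a method call again
  [first, second, third]
end

p resolve_foo # → ["method", "variable", "method"]
```

A parser that does not track scopes sees an identical token in all three bare uses, which is why the decision has to be made somewhere after parsing.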

Representing a parse tree in a relational database

While the parse trees produced by most parser libraries typically represent nodes and edges in the tree as objects and pointers, CodeQL uses a relational database. Going back to the simple diagram above, I can attempt to convert it to relational form. To do that, I should first define a schema (in a simplified version of CodeQL’s schema syntax):

```
expressions(id: int, kind: int)
calls(expr_id: int, name: string)
call_arguments(call_id: int, arg_id: int, arg_index: int)
string_literals(expr_id: int, val: string)
```

The expressions table has a row for each expression in the program. Each one is given a unique id, a primary key that I can use to reference the expression from other tables. The kind column defines what kind of expression it is. I can decide that a value of 1 means the expression is a method call, 2 means it’s a string literal, and so on.

The calls table has a row for each method call, and it allows me to specify data that is specific to call expressions. The first column is a foreign key, i.e. the id of the corresponding entry in the expressions table, while the second column specifies the name of the method. You might have expected that I’d add columns for the call’s arguments, but calls can take any number of arguments, so I wouldn’t know how many columns to add. Instead, I put them in a separate call_arguments table.

The call_arguments table, therefore, has one row per argument in the program. It has three columns: one that’s a reference to the call expression, another that’s a reference to an argument, and a third that specifies the index, or number, of that argument.

Finally, the string_literals table lets me associate the literal text value with the corresponding entry in the expressions table.

Populating our small database

Now that I’ve defined my schema, I’m going to manually populate a matching database with rows for my little greeting program:

expressions

| id | kind |
|---|---|
| 100 | 1 (call) |
| 101 | 2 (string literal) |
| 102 | 2 (string literal) |

calls

| expr_id | name |
|---|---|
| 100 | "puts" |

call_arguments

| call_id | arg_id | arg_index |
|---|---|---|
| 100 | 101 | 0 |
| 100 | 102 | 1 |

string_literals

| expr_id | val |
|---|---|
| 101 | "Hello" |
| 102 | "Ahoy" |
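Rows like these do not have to be written by hand. As a rough sketch of what an extractor does, the following toy code walks a tree and emits one row per fact into the four tables. The node types (`Call`, `Str`), the `ToyExtractor` class, and the starting id of 100 are all assumptions made for this example; the real extractor is written in Rust and is considerably more involved.

```ruby
# Hypothetical parse-tree nodes for the greeting program.
Call = Struct.new(:name, :args)
Str = Struct.new(:value)

KIND_CALL = 1
KIND_STRING = 2

# Walks the tree and appends one row per fact to the toy tables.
class ToyExtractor
  attr_reader :tables

  def initialize(first_id: 100)
    @next_id = first_id
    @tables = { expressions: [], calls: [], call_arguments: [], string_literals: [] }
  end

  # Returns the id assigned to the node so that parents can reference it.
  def extract(node)
    id = @next_id
    @next_id += 1
    case node
    when Call
      @tables[:expressions] << [id, KIND_CALL]
      @tables[:calls] << [id, node.name]
      node.args.each_with_index do |arg, index|
        @tables[:call_arguments] << [id, extract(arg), index]
      end
    when Str
      @tables[:expressions] << [id, KIND_STRING]
      @tables[:string_literals] << [id, node.value]
    else
      raise ArgumentError, "unsupported node: #{node.inspect}"
    end
    id
  end
end

extractor = ToyExtractor.new
extractor.extract(Call.new("puts", [Str.new("Hello"), Str.new("Ahoy")]))
extractor.tables.each { |name, rows| puts "#{name}: #{rows.inspect}" }
```

Because each node receives its id before its children are visited, the call gets id 100 and its two arguments get 101 and 102, matching the hand-populated tables.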

Suppose this were a SQL database, and I wanted to write a query to find all the expressions that are arguments in calls to the puts method. It might look like this:

```sql
SELECT call_arguments.arg_id
FROM call_arguments
INNER JOIN calls ON calls.expr_id = call_arguments.call_id
WHERE calls.name = 'puts';
```

In practice, we don’t use SQL. Instead, CodeQL queries are written in the QL language and evaluated using our custom database engine. QL is an object-oriented, declarative logic-programming language that is superficially similar to SQL but based on Datalog. Here’s what the same query might look like in QL:

```ql
from MethodCall call, Expr arg
where
  call.getMethodName() = "puts" and
  arg = call.getAnArgument()
select arg
```

MethodCall and Expr are classes that wrap the database tables, providing a high-level, object-oriented interface, with helpful predicates like getMethodName() and getAnArgument().
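For readers more at home in Ruby than in QL, here is a loose analogy of what "classes that wrap the database tables" means. It is only a sketch: the in-memory `DB` hash, the Ruby classes, and the helper `arguments_of_calls_to` are invented for illustration and say nothing about how the QL engine actually evaluates queries.

```ruby
# The toy tables from the example, as arrays of hashes.
DB = {
  calls: [{ expr_id: 100, name: "puts" }],
  call_arguments: [
    { call_id: 100, arg_id: 101, arg_index: 0 },
    { call_id: 100, arg_id: 102, arg_index: 1 }
  ]
}.freeze

# An expression object holds nothing but an id; everything else is looked up.
class Expr
  attr_reader :id

  def initialize(id)
    @id = id
  end
end

class MethodCall < Expr
  # One object per row of the calls table.
  def self.all
    DB[:calls].map { |row| new(row[:expr_id]) }
  end

  def method_name
    DB[:calls].find { |row| row[:expr_id] == id }[:name]
  end

  # Hides the join against call_arguments behind a method.
  def arguments
    DB[:call_arguments]
      .select { |row| row[:call_id] == id }
      .sort_by { |row| row[:arg_index] }
      .map { |row| Expr.new(row[:arg_id]) }
  end
end

# Same shape as the query: every argument of every call to the named method.
def arguments_of_calls_to(name)
  MethodCall.all.select { |call| call.method_name == name }.flat_map(&:arguments)
end

p arguments_of_calls_to("puts").map(&:id) # → [101, 102]
```

The caller never mentions a table or a join; that is the convenience the wrapper classes provide.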

Language-specific schemas

The toy database schema I defined might look as though it could be reused for just about every programming language that has string literals and method calls. However, in practice, I’d need to extend it to support some features that are unique to Ruby, such as the optional blocks that can be passed with every method call.

Every language has these unique quirks, so each one GitHub supports has its own schema, perfectly tuned to match that language’s syntax. Whereas my toy schema had only two kinds of expression, our JavaScript and C/C++ schemas, for example, both define over 100 kinds of expression. Those schemas were written manually, being refined and expanded over the years as we made improvements and added support for new language features.

In contrast, one of the major differences in how we approached Ruby is that we decided to generate the database schema automatically. I mentioned earlier that tree-sitter provides a machine-readable description of its grammar. This is a file called node-types.json, and it provides information about all the nodes tree-sitter can return after parsing a Ruby program. It defines, for example, a type of node named binary, representing binary operation expressions, with fields named left, operator, and right, each with their own type information. It turns out this is exactly the kind of information we want in our database schema, so we built a tool to read node-types.json and spit out a CodeQL database schema.

You can see the schema it produces for Ruby here.
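The following sketch shows the general shape of such a generator. It assumes a hand-written fragment in the format of tree-sitter's `node-types.json` (the real Ruby file is far larger, and the `argument_list` entry here is simplified), and it emits the simplified schema syntax used earlier in this post rather than real CodeQL schema syntax. The translation rules (one table per named node, a side table for repeated children) are an illustrative guess, not the actual tool's rules; optional fields and supertypes are ignored.

```ruby
require "json"

# Illustrative excerpt in the shape of tree-sitter's node-types.json.
NODE_TYPES = JSON.parse(<<~NODE_TYPES_JSON)
  [
    {
      "type": "binary",
      "named": true,
      "fields": {
        "left":     { "multiple": false, "required": true, "types": [{ "type": "_expression", "named": true }] },
        "operator": { "multiple": false, "required": true, "types": [{ "type": "+", "named": false }] },
        "right":    { "multiple": false, "required": true, "types": [{ "type": "_expression", "named": true }] }
      }
    },
    {
      "type": "argument_list",
      "named": true,
      "fields": {},
      "children": { "multiple": true, "required": false, "types": [{ "type": "_expression", "named": true }] }
    }
  ]
NODE_TYPES_JSON

# Emits one table per named node type with a column per single-valued field,
# plus a (parent, index, value) table for each repeated field or child list.
def generate_schema(node_types)
  lines = []
  node_types.select { |node| node["named"] }.each do |node|
    name = node["type"]
    members = (node["fields"] || {}).to_a
    members << ["child", node["children"]] if node["children"]
    single, repeated = members.partition { |_, info| !info["multiple"] }

    columns = ["id: int"] + single.map { |field, _| "#{field}: int" }
    lines << "#{name}(#{columns.join(', ')})"
    repeated.each do |field, _|
      lines << "#{name}_#{field}(parent: int, index: int, #{field}: int)"
    end
  end
  lines
end

puts generate_schema(NODE_TYPES)
```

Nothing in `generate_schema` mentions Ruby syntax: it only reads the JSON description, which is the property that makes the approach reusable.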

Bridging the gap

I’ve talked about how the parser gives us a parse tree, and how we could represent that same tree in a database. That’s really the main job of an extractor – massaging the parse tree into a relational database format. How easy or difficult that is depends a lot on how similar those two structures look. For some languages, we defined our database schema to closely match the structure (and naming scheme) of the tree produced by the parser. That is, there’s a high level of correspondence between the parser’s node names and the database’s table names. For those languages, an extractor’s job is fairly simple. For other languages, where we perhaps decided that the tree produced by the parser didn’t map nicely to our ideal database schema, we have to do more work to convert from one to the other.

For Ruby, where we wrote a schema-generator to automatically translate tree-sitter’s description of its node types, our extractor’s job is quite simple: when tree-sitter parses the program and gives us those tree nodes, we can perform the same translations and automatically produce a database that conforms to the schema.

Language independence

This extraction process is not only straightforward, it’s also completely language-agnostic. That is, the process is entirely mechanical and works for any tree-sitter grammar. Our schema-generator and extractor know nothing about Ruby or its syntax – they simply know how to translate tree-sitter’s node-types.json. Since tree-sitter has parsers for dozens of languages, and they all have corresponding node-types.json files, we have effectively written a pair of tools that could produce CodeQL databases for any of them.

One early benefit of this approach came when we realized that, to provide comprehensive analysis of Ruby on Rails applications, we’d also need to parse the ERB templates used to render Rails views. ERB is a distinct language that needs to be parsed separately and extracted into our database. Thankfully, tree-sitter has an existing ERB parser, so we simply had to point our tooling at that node-types.json file as well, and suddenly we had a schema-generator and extractor that could handle both Ruby and ERB.

As an aside, the ERB language is quite simple, mostly consisting of tags that differentiate between the parts of the template that are text and the parts that are Ruby code. The ERB parser only cares about those tags and doesn’t parse the Ruby code itself. This is because tree-sitter provides an elegant feature that will return a series of byte offsets delimiting the parts that are Ruby code, which we can then pass on to the Ruby parser. So, when we extract an ERB file, we parse and extract the ERB parse tree first, and then perform a second pass that parses and extracts the Ruby parse tree, ignoring the text parts of the template file.
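To illustrate the two-pass idea, the sketch below finds the byte ranges of the Ruby code inside an ERB template. It uses a simple regular expression as a stand-in for the ERB parser, so it is not tree-sitter's API; the method name `ruby_code_ranges` is made up, and details such as trim markers (`-%>`) are deliberately not handled.

```ruby
# Returns the byte ranges of the Ruby code inside <% ... %> and <%= ... %>
# tags, skipping ERB comments (<%# ... %>) and the literal escape (<%%).
def ruby_code_ranges(template)
  bytes = template.b # binary copy, so match offsets are byte offsets
  ranges = []
  bytes.scan(/<%(?![#%])=?(.*?)%>/m) do
    match = Regexp.last_match
    ranges << (match.begin(1)...match.end(1))
  end
  ranges
end

template = "<h1>Hi</h1>\n<% if admin %>\n  <%= name %>\n<% end %>\n"
ranges = ruby_code_ranges(template)

# Second pass: only these slices would be handed to the Ruby parser.
ranges.each { |range| puts "#{range}: #{template.byteslice(range).strip}" }
```

The text parts of the template (`<h1>Hi</h1>` and the whitespace between tags) never reach the second pass.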

Of course, this automated approach to extraction does come with some tradeoffs, since it involves deferring some work from the extraction stage to the analysis stage. Our QL AST library, for example, has to do more work to transform the parse tree into a user-friendly AST representation, compared with some languages where the AST classes are thin wrappers over database tables. And if we look at our existing extractor for C#, for example, we see that it gets type information from the Roslyn compiler frontend, and stores it in the database. Our language-independent tooling, meanwhile, does not attempt any kind of type analysis, so if we wanted to use it on a statically typed language, we’d have to implement that type analysis ourselves in QL.

Nonetheless, we are excited about the possibilities this language-independent tooling opens up as we look to expand CodeQL analysis to cover more languages in the future, especially for dynamic languages. There’s a lot more to analyzing a language than producing a database, but getting that part working with little to no effort should be a major time-saver.

github/github: so good, they named it twice

github/github is the Ruby on Rails application that powers GitHub.com, and it’s rather large. I’ve heard it said that it’s one of the largest Rails apps in the world. That means it’s an excellent stress test for our Ruby extractor.

It was actually the second Ruby program we ever extracted (the first was “Hello, World!”).

Of course, extraction wasn’t quite perfect. We observed a number of parser errors that we fixed upstream in the tree-sitter-ruby project, so our work not only resulted in improved Ruby code-viewing on GitHub.com, but also improvements in the other projects that use tree-sitter, such as Neovim’s syntax highlighting.

It was also a useful benchmark for extraction speed. CodeQL can’t start the valuable work of analyzing for vulnerabilities until the code has been extracted and a database produced, so we wanted extraction to be as fast as possible. It certainly helps that tree-sitter itself is fast, and that our automatic translation of tree-sitter’s parse tree is simple.

The biggest speedup – and where our choice to implement the extractor in Rust really helped – was in implementing multi-threaded extraction. With the design we chose for the Ruby extractor, the work it performs on each source file is completely independent of any other source file. That makes extraction an embarrassingly parallel problem, and using the Rayon library meant we barely had to change any code at all to take advantage of that. We simply changed a for loop to use a Rayon parallel-iterator, and it handled the rest. Suddenly extraction got eight times faster on my eight-core laptop.
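The structure of the problem can be sketched in Ruby, with the caveat that this is not the extractor's Rust code and does not use Rayon. The methods `extract_file` and `extract_all` are hypothetical stand-ins. Also note that threads in the standard Ruby interpreter do not execute Ruby code on several cores at once, so this sketch shows only why the work splits cleanly, not the speedup itself.

```ruby
# Stand-in for per-file extraction: the result depends only on this file.
def extract_file(path, source)
  { path: path, lines: source.lines.count, bytes: source.bytesize }
end

# Hands files to a fixed pool of worker threads through a shared queue.
def extract_all(files, workers: 4)
  queue = Queue.new
  files.each { |entry| queue << entry }
  queue.close # pop returns nil once the closed queue is drained

  results = Queue.new
  threads = Array.new(workers) do
    Thread.new do
      while (entry = queue.pop)
        results << extract_file(*entry)
      end
    end
  end
  threads.each(&:join)

  # Completion order is arbitrary, so sort for a stable result.
  Array.new(results.size) { results.pop }.sort_by { |row| row[:path] }
end

files = { "a.rb" => "puts 1\n", "b.rb" => "x = 2\nputs x\n" }
p extract_all(files)
```

Since no worker reads or writes anything belonging to another file, the workers need no locks beyond the queues themselves, and the sorted result is the same as a sequential loop would produce.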

CodeQL now runs on every pull request against github/github, using a 32-core Actions runner, where Ruby extraction takes just 15 seconds. The core engine still has to perform ‘database finalization’ after the extractor finishes, and this takes a little longer (it parallelizes, but not “embarrassingly”), but we are extremely pleased with the extractor’s performance given the size of the github/github codebase, and given our experiences with extracting other languages.

We should be in a good place to accommodate all our users’ Ruby codebases, large and small.

Try it out

I hope you’ve enjoyed this dive into the technical details of extracting CodeQL databases. If you’d like to try CodeQL on your Ruby projects, please refer to the blog post announcing the Ruby public beta, which contains several handy links to help you get started.
