What the heck is MCP and why is everyone talking about it?

Everyone’s talking about MCP these days when it comes to large language models (LLMs)—here’s what you need to know.

We’ve tested integrating OpenAI o1-preview with GitHub Copilot. Here’s a first look at where we think it can add value to your day-to-day work.

We are enabling the rise of the AI engineer with GitHub Models, bringing the power of industry-leading large and small language models to our more than 100 million users directly on GitHub.

Learn how your organization can customize its LLM-based solution through retrieval-augmented generation (RAG) and fine-tuning.

Learn how we’re experimenting with generative AI models to extend GitHub Copilot across the developer lifecycle.

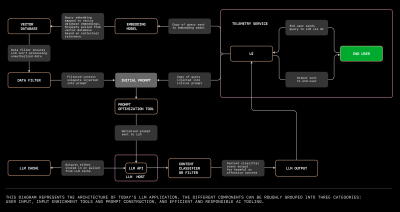

Here’s everything you need to know to build your first LLM app and problem spaces you can start exploring today.

Explore how LLMs generate text, why they sometimes hallucinate information, and the ethical implications surrounding their incredible capabilities.

Open source generative AI projects are a great way to build new AI-powered features and apps.

The team behind GitHub Copilot shares its lessons for building an LLM app that delivers value to both individuals and enterprise users at scale.

We’re launching the GitHub Copilot Trust Center to provide transparency about how GitHub Copilot works and help organizations innovate responsibly with generative AI.

Prompt engineering is the art of communicating with a generative AI model. In this article, we’ll cover how we approach prompt engineering at GitHub, and how you can use it to build your own LLM-based application.

Developers behind GitHub Copilot discuss what it was like to work with OpenAI’s large language model and how it informed the development of Copilot as we know it today.

With a new Fill-in-the-Middle paradigm, GitHub engineers improved the way GitHub Copilot contextualizes your code. By continuing to develop and test advanced retrieval algorithms, they’re working to make our AI tool even more capable.
